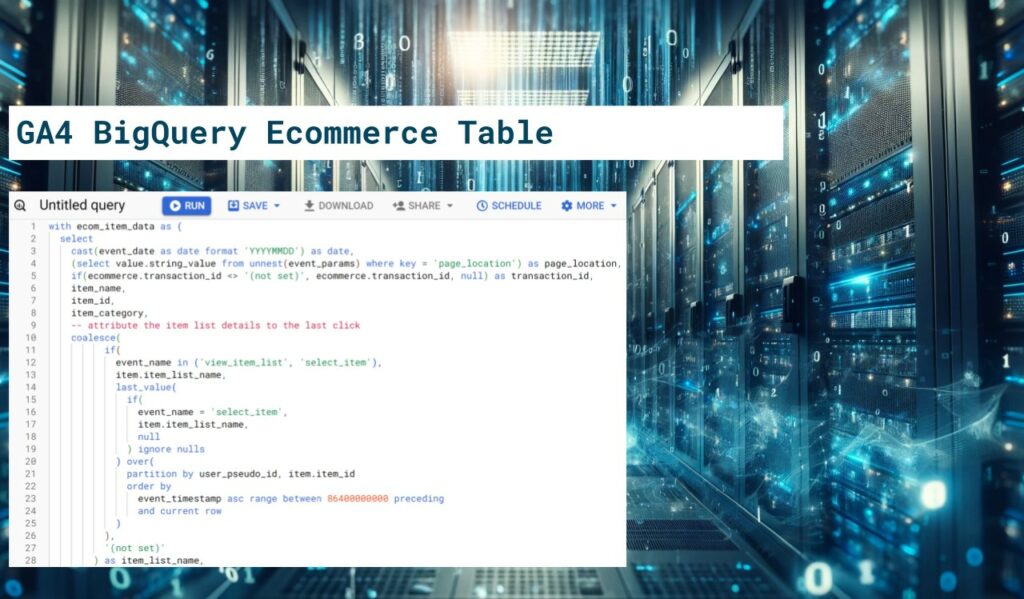

When you start working with GA4 data in BigQuery, you’ll hear that you should avoid connecting your dashboards directly to the raw data export tables. The reasons behind this include query performance and cost optimization. The need for optimizing your data sources is especially true when working with item-level ecommerce data.

In this post, I’ll explain how to create an aggregated ecommerce table that can be used as the data source for dashboards, for example, in Looker Studio.

As an additional bonus, the table will include item list attribution.

The old Universal Analytics had the data processing required for item list attribution built-in. However, getting item list-level views, click-through rates, and sales numbers in the GA4 UI requires that your site saves this information in the browser’s storage and includes it with every event from item_list_view to purchase.

It is not the ideal solution for the browser and its storage to be responsible for the attribution. Ideally, things that can be done outside of the browser would be done outside of the browser.

Luckily, we have the BigQuery export. If you have a proper ecommerce tracking setup in place, the events will contain all the required data for attribution. The SQL approach will also allow you to control the attribution window more efficiently and make later adjustments.

How to compose the query?

The query will include these steps:

- Extract the item-level ecommerce actions

- Apply item list attribution using the specified lookback window

- Group the results to reduce the number of rows in the final table

The result will be an item-level aggregated table optimized for your ecommerce dashboards.

with ecom_item_data as (

select

cast(event_date as date format 'YYYYMMDD') as date,

(select value.string_value from unnest(event_params) where key = 'page_location') as page_location,

if(ecommerce.transaction_id <> '(not set)', ecommerce.transaction_id, null) as transaction_id,

item_name,

item_id,

item_category,

-- attribute the item list details to the last click

coalesce(

if(

event_name in ('view_item_list', 'select_item'),

item.item_list_name,

last_value(

if(

event_name = 'select_item',

item.item_list_name,

null

) ignore nulls

) over(

partition by user_pseudo_id, item.item_id

order by

event_timestamp asc range between 86400000000 preceding

and current row

)

),

'(not set)'

) as item_list_name,

coalesce(

if(

event_name in ('view_item_list', 'select_item'),

item.item_list_index,

last_value(

if(

event_name = 'select_item',

item.item_list_index,

null

) ignore nulls

) over(

partition by user_pseudo_id, item.item_id

order by

event_timestamp asc range between 86400000000 preceding

and current row

)

),

'(not set)'

) as item_list_index,

if(event_name = 'view_item_list', item.quantity, 0) as view_item_list,

if(event_name = 'select_item', item.quantity, 0) as select_item,

if(event_name = 'view_item', item.quantity, 0) as view_item,

if(event_name = 'add_to_cart', item.quantity, 0) as add_to_cart,

if(event_name = 'begin_checkout', item.quantity, 0) as begin_checkout,

if(event_name = 'add_shipping_info', item.quantity, 0) as add_shipping_info,

if(event_name = 'add_payment_info', item.quantity, 0) as add_payment_info,

if(event_name = 'purchase', item.quantity, 0) as purchase,

item_revenue

from

`<project>.<dataset>.events_*`,

unnest(items) as item

where

(_table_suffix between cast(date_trunc(current_date(), year) as string format "YYYYMMDD")

and cast(current_date()-1 as string format "YYYYMMDD"))

and event_name in ("view_item_list","select_item","view_item","add_to_cart","begin_checkout","add_shipping_info","add_payment_info","purchase")

)

select

date,

transaction_id,

page_location,

data_is_final,

item_list_name,

item_list_index,

item_name,

item_id,

item_category,

sum(view_item_list) as view_item_list,

sum(select_item) as select_item,

sum(view_item) as view_item,

sum(add_to_cart) as add_to_cart,

sum(begin_checkout) as begin_checkout,

sum(add_shipping_info) as add_shipping_info,

sum(add_payment_info) as add_payment_info,

sum(purchase) as purchase,

sum(coalesce(item_revenue, 0)) as item_revenue

from

ecom_item_data

group by

date,

transaction_id,

page_location,

data_is_final,

item_list_name,

item_list_index,

item_name,

item_id,

item_categoryHow does the attribution work?

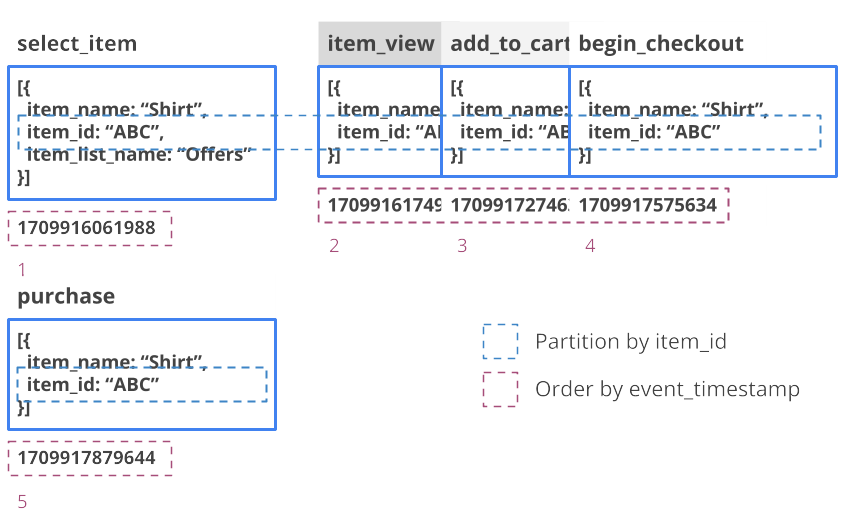

Item-list attribution is perhaps the most interesting part of the query. The beautiful illustration below is my best attempt at explaining how it, and BigQuery window functions generally, work.

The query expects the view_item_list and select_item events to include the item_list_name parameter. For the rest of the ecommerce events, the attribution is applied.

The attribution defines a window (the over clause) based on events that share the same user_pseudo_id and item_id, meaning that all events in the window contain the same item and are from the same user. In this window, the events are ordered by event_timestamp. The item_list_name of the last preceding select_item event is returned for each event.

Scheduling and automating table updates using Dataform

Below is the same query, this time implemented as an incremental table using Dataform.

config {

type: "incremental",

description: "Aggregated item-level ecommerce data source to be used in Looker Studio.",

schema: "ga4",

bigquery: {

partitionBy: "date_trunc(date, month)",

clusterBy: ["item_category", "item_name", "page_location", "item_list_name"]

},

tags: []

}

js {

const test = false; // used for testing with less data

// number of days used as the item list attribution lookback window

const listAttributionDays = 1;

// date range

const startDate = test ? `current_date()-1` : `date_checkpoint`;

const endDate = 'current_date()';

// the initial date filter, taking into account the lookback window

const dateFilterInitial = `(

(_table_suffix >= cast(${startDate}-${listAttributionDays} as string format "YYYYMMDD") and _table_suffix <= cast(${endDate} as string format "YYYYMMDD"))

or (_table_suffix >= 'intraday_'||cast(${startDate}-${listAttributionDays} as string format "YYYYMMDD") and _table_suffix <= 'intraday_'||cast(${endDate} as string format "YYYYMMDD"))

)`;

// final date filter, applied last

const dateFilter = `(date >= ${startDate} and date <= ${endDate})`;

// list of item fields to include

const ecomItemDimensions = [

'item_name',

'item_id',

'item_category',

];

// all fields included in the final group by

const groupByColumns = [

'date',

'transaction_id',

'page_location',

'data_is_final',

'item_list_name',

'item_list_index'

].concat(ecomItemDimensions);

// list of ecom events to include

// the query calculates item quantity for each event

const ecomEvents = [

'view_item_list',

'select_item',

'view_item',

'add_to_cart',

'begin_checkout',

'add_shipping_info',

'add_payment_info',

'purchase'

];

// a utility for calculating ecom event item quantities

const ecomActionColumns = ecomEvents.map(e => {

return `if(event_name = '${e}', item.quantity, 0) as ${e}`;

}).join(',\n ');

// a utility for aggregating the ecom metrics

const sumEcomEvents = ecomEvents.map(e => {

return `sum(${e}) as ${e}`;

}).join(',\n ');;

// the formula for attributing item-level fields

const attributeListDimension = (dimensionName) => {

return `coalesce(

if(

event_name in ('view_item_list', 'select_item'),

${dimensionName},

last_value(

if(

event_name = 'select_item',

${dimensionName},

null

) ignore nulls

) over(

partition by user_pseudo_id, item.item_id

order by

event_timestamp asc range between ${listAttributionDays*24*60*60*1000*1000} preceding

and current row

)

),

'(not set)'

)`;

};

}

with ecom_item_data as (

select

${ga4.eventDate} as date,

${ga4.unnestEventParam('page_location')} as page_location,

if(ecommerce.transaction_id <> '(not set)', ecommerce.transaction_id, null) as transaction_id,

${ecomItemDimensions.join(',\n ')},

-- attribute the item list details to the last click

${attributeListDimension('item.item_list_name')} as item_list_name,

${attributeListDimension('item.item_list_index')} as item_list_index,

-- tip: you can use the same formula to attribute other dimensions as well

${ecomActionColumns},

item_revenue,

if(

date_diff(current_date(), ${ga4.eventDate}, day) > 3,

true,

false

) as data_is_final,

from

`<project>.<dataset>.events_*`,

unnest(items) as item

where

${dateFilterInitial}

and event_name in (${ecomEvents.map(e => `"${e}"`)})

qualify

-- exclude duplicate data in case both the intraday and daily tables exist at the same time

-- credit: https://stuifbergen.com/2024/04/smart-incremental-ga4-tables-in-dataform/

dense_rank() over(

partition by regexp_extract(_table_suffix, r'[0-9]+') order by _table_suffix

) = 1

)

select

${groupByColumns.join(',\n ')},

${sumEcomEvents},

sum(coalesce(item_revenue, 0)) as item_revenue

from

ecom_item_data

where

${dateFilter}

group by

${groupByColumns.join(',\n ')}

pre_operations {

-- date checkpoint is based on the latest date that had "final" data

declare date_checkpoint default (

${

when(incremental(),

// incremental query starting point, based on the data_is_final flag

`select max(date)+1 from ${self()} where data_is_final = true`,

// date range start with full refresh, last 12 months of data

`select date_sub(current_date(), interval 1 year)`

)

}

)

---

-- delete rows that are about to be added again through the incremental refresh

${

when(incremental(),

`delete from ${self()} where date >= date_checkpoint`

)

}

}

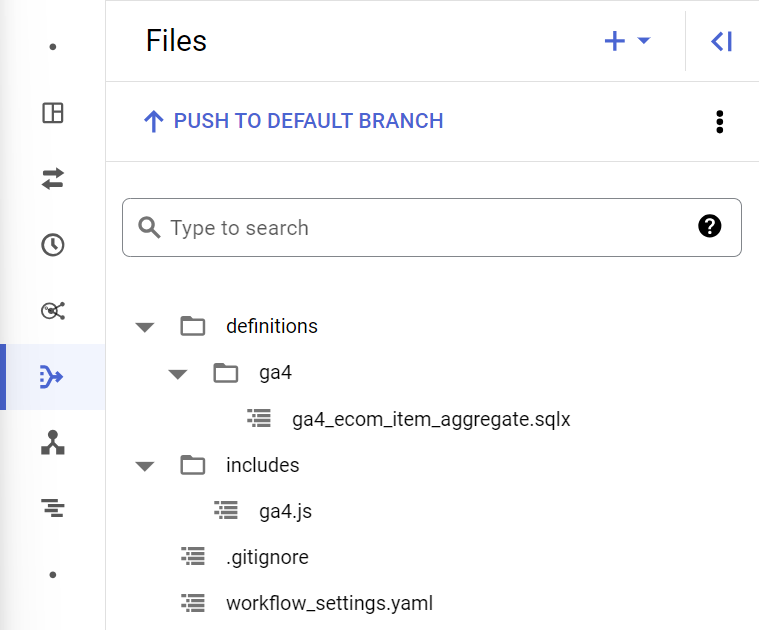

In addition to the JavaScript defined in the .sqlx file, the query references some general helper functions. Those are defined in the ga4.js file in the includes folder of the Dataform repository.

const eventDate = `cast(event_date as date format 'YYYYMMDD')`;

// unnest any parameter from the selected params array

const unnestParam = (keyName, paramsArray, dataType) => {

if (dataType) {

// return the value from the selected column

if (dataType === 'string') {

return `(select value.string_value from unnest(${paramsArray}) where key = '${keyName}')`;

} else if (dataType === 'int') {

return `(select value.int_value from unnest(${paramsArray}) where key = '${keyName}')`;

} else if (dataType === 'double') {

return `(select value.double_value from unnest(${paramsArray}) where key = '${keyName}')`;

} else if (dataType === 'float') {

return `(select value.float_value from unnest(${paramsArray}) where key = '${keyName}')`;

}

} else {

// return the value from the column that has data, cast as string

return `(select coalesce(value.string_value, cast(value.int_value as string), cast(value.double_value as string), cast(value.float_value as string)) from unnest(${paramsArray}) where key = '${keyName}')`;

}

};

// unnest a param from the event_params array

// returns the value of any data type if dataType param is not included

const unnestEventParam = (keyName, dataType) => {

return unnestParam(keyName, 'event_params', dataType);

}

module.exports = {

eventDate,

unnestEventParam,

}Once all set up, the contents of your Dataform repository should look something like this:

In addition to the raw SQL query presented earlier, the Dataform version includes additional logic for handling the incremental and full refreshes. Data in the GA4 export tables can receive updates for up to 72 hours. The query reflects that by flagging rows as “final” when they are over three days old. The “not final” rows in the destination table will always get deleted before new data is brought in. For more information about the incremental refresh logic, see my earlier post on incremental refresh techniques for Dataform.

Final thoughts

The example query provided in the post can serve as the starting point for building a proper ecommerce data source table for Looker Studio or other dashboarding tools. Most likely, the dimensions and metrics included in the example won’t be enough, and you’ll want to include data on traffic sources and additional item details, for example. Having the query in Dataform makes these kinds of adjustments much easier to make compared to a raw SQL + scheduled query approach.

Happy coding!

hi Taneli,

Thank you, this was a great content. I am getting the error Unrecognized name: _table_suffix at [62:4]. I wanted to ask if it is a problem related to me.

Hi Mehmet,

_table_suffix only works when you use a wildcard in the table selection.

This will throw an error:

SELECT * FROM `project.dataset.events_20240826` where _table_suffix = ‘20240101’

But this should work:

SELECT * FROM `project.dataset.events_*` where _table_suffix = ‘20240101’

If you don’t use a wildcard, you can remove the _table_suffix from the where clause.