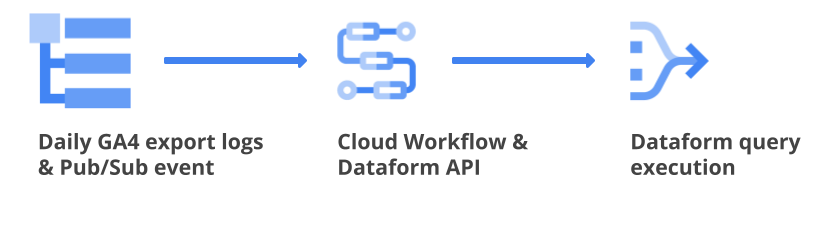

The daily BigQuery export schedules for GA4 are notoriously random. Sometimes the data is processed in the early-morning hours, sometimes at noon, and sometimes not even during the next day. This makes using a CRON job for accessing the daily GA4 export tables in Dataform very inconvenient.

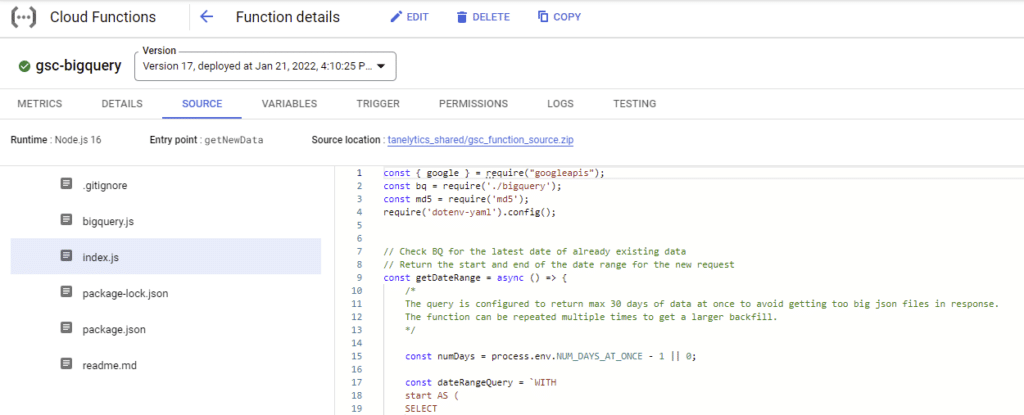

Luckily there’s a better option. By utilizing the Google Cloud Platform logs, you can trigger your Dataform queries at that exact moment when the daily data processing is complete.

In this article, I’ll walk you through how to set up a Cloud Workflow that compiles and executes the selected queries in your Dataform project. The Workflow will be triggered whenever the GCP logs indicate the daily GA4 export has happened.

As a bonus, I’ll also cover how you can set up a simpler CRON trigger for the Workflow using Cloud Scheduler.

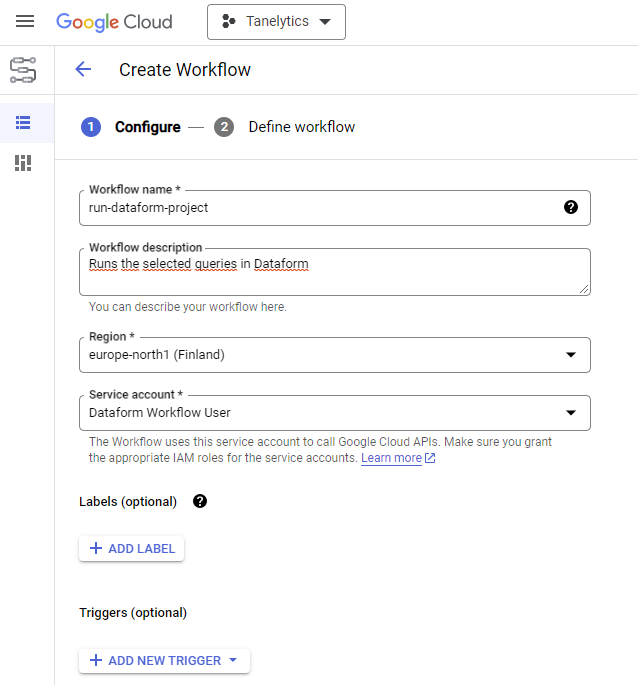

Configure the Workflow

Let’s start by configuring a Workflow that will trigger the run of the Dataform project with the selected queries. Start by heading to Workflows in Google Cloud and clicking the create button.

Give the Workflow a name and select a region where the Workflow will run. You will also need to select a service account that will be used for executing the workflow. If you don’t have the service account yet, you can create one now.

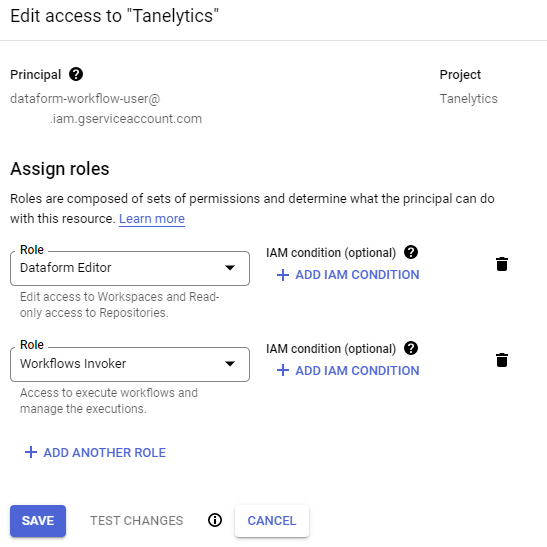

These roles will include the required permissions for the service account:

- Dataform Editor

- Workflows Invoker

You can leave the Workflow’s Triggers empty at this point. Continue by clicking next.

After that, you can configure the actual Workflow using the following code.

main:

steps:

- init:

assign:

- parent: projects/<google-cloud-project-id>/locations/<dataform-location>/repositories/<dataform-name>

- createCompilationResult:

call: http.post

args:

url: ${"https://dataform.googleapis.com/v1beta1/" + parent + "/compilationResults"}

auth:

type: OAuth2

body:

gitCommitish: main

result: compilationResult

- createWorkflowInvocation:

call: http.post

args:

url: ${"https://dataform.googleapis.com/v1beta1/" + parent + "/workflowInvocations"}

auth:

type: OAuth2

body:

compilationResult: ${compilationResult.body.name}

invocationConfig:

fullyRefreshIncrementalTablesEnabled: false

includedTags: ["ga4"]

includedTargets: []

transitiveDependenciesIncluded: true

transitiveDependentsIncluded: false

result: workflowInvocation

- complete:

return: ${workflowInvocation.body.name + " complete"}The Workflow includes two Dataform API calls: The first will compile the queries in your Dataform repository, and the second will execute the included queries.

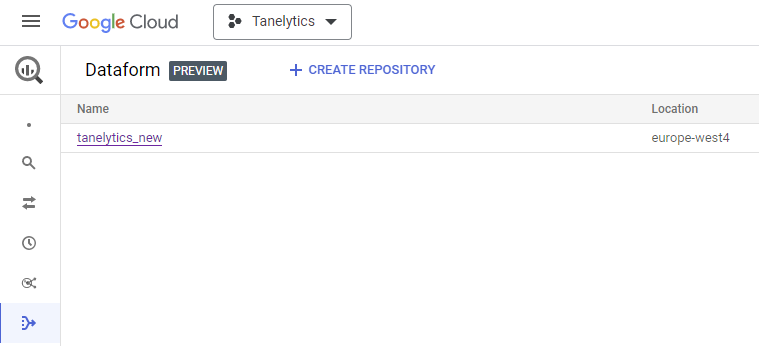

Enter the details of your Dataform repository into the parent variable in the Workflow. You can find the details in your Dataform project.

Note: As you can see, the Workflow is using the v1beta version of the Dataform API. This should require a change at some point.

The settings for the Dataform execution are defined in the invocationConfig. The example config I’ve included will run all queries that have the “ga4” tag. Additionally, the run will include any queries that have been declared as dependencies to the “ga4” queries.

Option 1: Set up the Pub/Sub trigger

Note: If you are looking to set up a CRON trigger, you can skip this part.

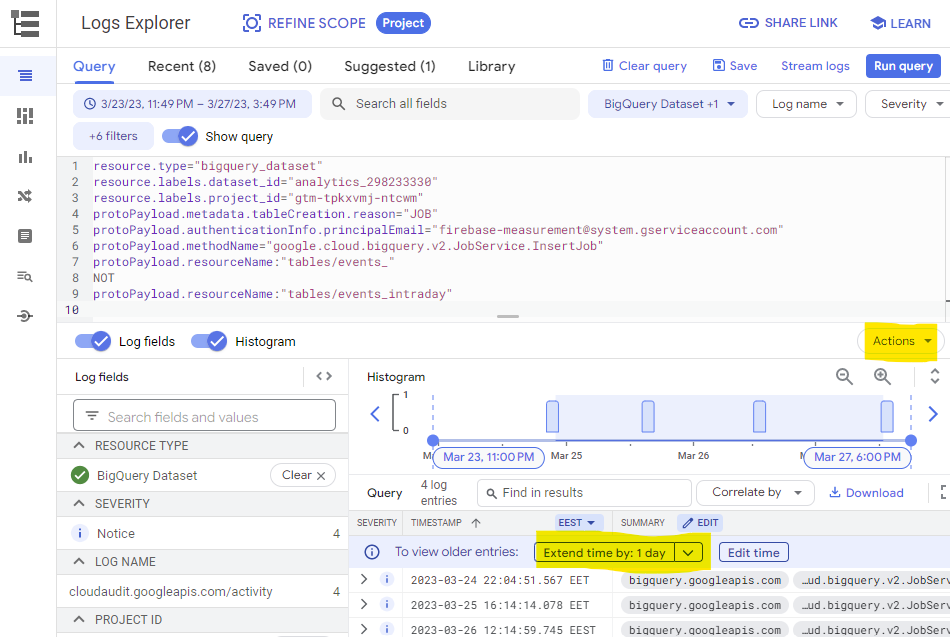

Start by heading to Cloud Logging. We will use the logs to identify when the daily GA4 BigQuery export happens.

Use the following query to see when new daily GA4 events tables have been created last. Remember to extend the time range enough to be able to see the logs. The query should return one log per table created.

resource.type="bigquery_dataset"

resource.labels.dataset_id="<ga4-dataset-id>"

resource.labels.project_id="<google-cloud-project-id>"

protoPayload.metadata.tableCreation.reason="JOB"

protoPayload.authenticationInfo.principalEmail="firebase-measurement@system.gserviceaccount.com"

protoPayload.methodName="google.cloud.bigquery.v2.JobService.InsertJob"

protoPayload.resourceName:"tables/events_"

NOT

protoPayload.resourceName:"tables/events_intraday"The above query will only catch the table creation logs for the daily events tables. However, the tables’ contents could also get updated at a later time.

GA4 allows sending in events with a 72-hour delay, which is also reflected in the BigQuery export. If you are sending in events with a long delay, you can expect the tables’ contents to change after the initial creation.

The following version of the query will include all log events of when the data of an events table changed.

resource.type="bigquery_dataset"

resource.labels.dataset_id="<ga4-dataset-id>"

resource.labels.project_id="<google-cloud-project-id>"

protoPayload.metadata.tableDataChange.reason="JOB"

protoPayload.authenticationInfo.principalEmail="firebase-measurement@system.gserviceaccount.com"

protoPayload.methodName="google.cloud.bigquery.v2.JobService.InsertJob"

protoPayload.resourceName:"tables/events_"

NOT

protoPayload.resourceName:"tables/events_intraday"

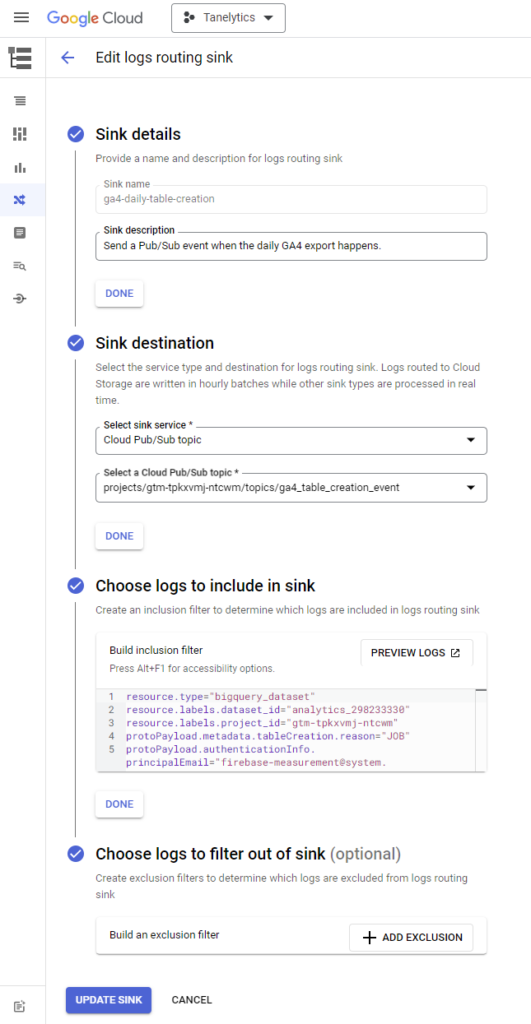

Once you’ve verified the query, you can use it to create a sink. Click on Actions -> Create sink and proceed to configure the sink’s details.

Select Cloud Pub/Sub topic as the sink service. After that, you can choose to create a new topic. This Pub/Sub topic will receive the event whenever a table creation is catched by the logs routing sink.

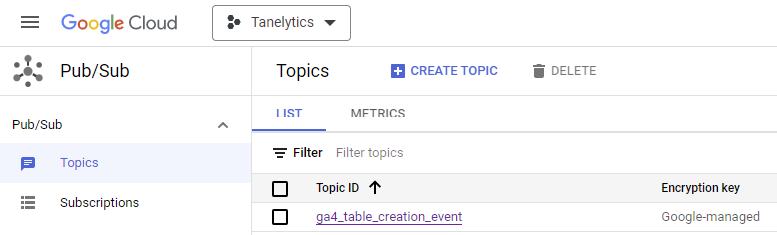

After completing the setup, you should see the newly created topic in Pub/Sub.

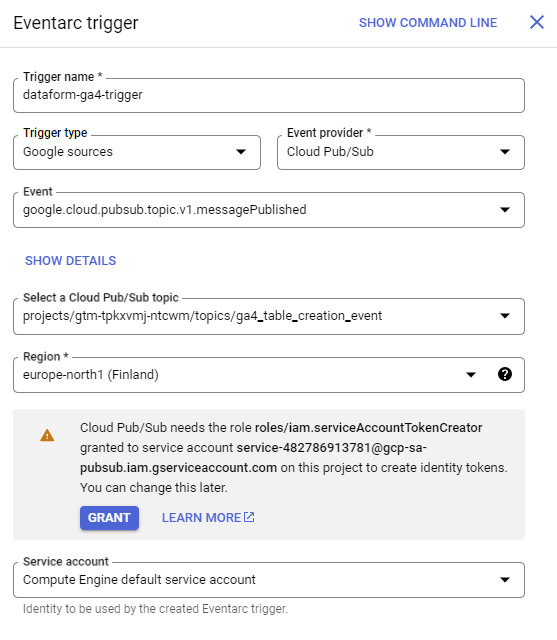

Once you’ve created the Pub/Sub event, you can head back to Workflows to include it as a trigger for the Workflow created earlier. Configure the existing Workflow and click add new trigger. Select the Eventarc trigger type.

Select Cloud Pub/Sub as the event provider. Then, for the event, select the direct messagePublished event. After that, you can select the topic that was set up earlier.

The region should match the region that you selected when creating the Workflow initially.

You will most likely also be notified that the GCP Pub/Sub service account requires the serviceAccountTokenCreator role. That can be done easily by just clicking grant.

After you’ve saved the trigger, you can click next and deploy the Workflow. The Workflow setup is now complete.

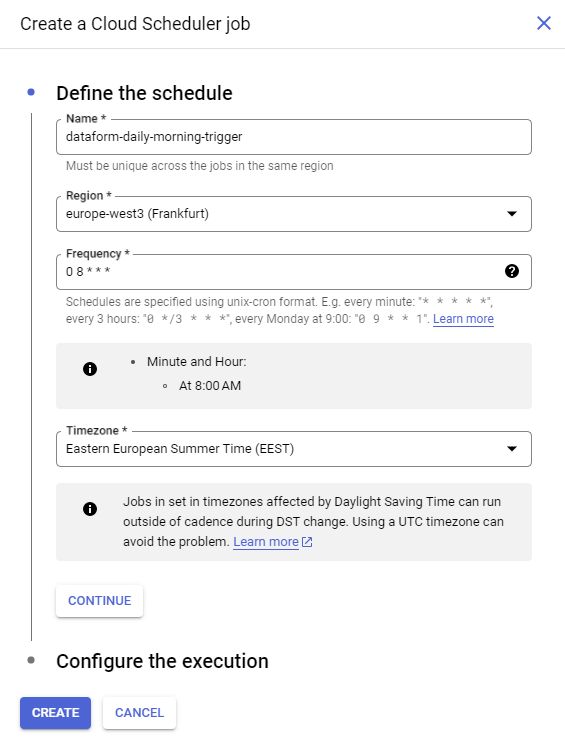

Option 2: Set up a CRON trigger

The configuration for a scheduled trigger is a lot simpler than the Pub/Sub trigger. Start by navigating to your Workflow and clicking edit. From there you can add a new trigger. Choose Cloud Scheduler.

In the trigger you can define a schedule using the CRON format. The one I’ve defined in the example is set to trigger every day at 8 AM.

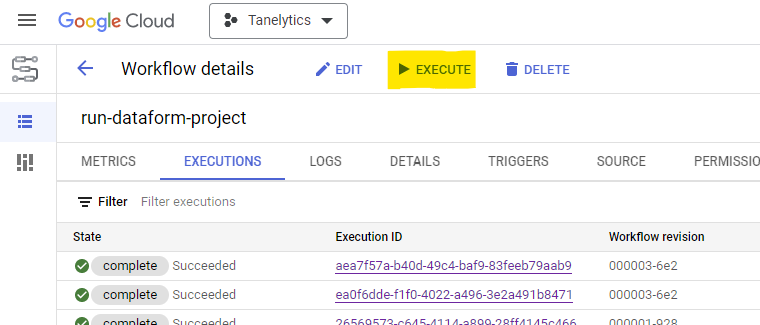

Test the setup

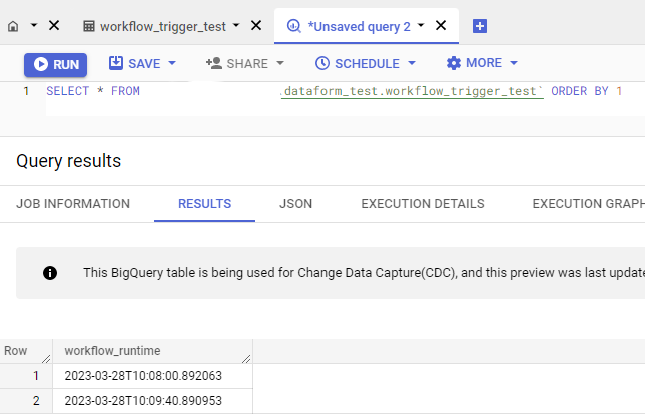

Below is a simple query for testing the new Workflow in Dataform. The query will log the Workflow’s runtime into an incremental table. You can use it to test if the Workflow executes successfully.

config {

type: "incremental",

description: "Test the Cloud Workflows trigger.",

schema: "dataform_test",

tags: ["ga4"]

}

select

current_datetime() as workflow_runtime

If you are too eager to wait for the next GA4 daily export, you can also trigger the Workflow manually.

After running the workflow, you should always see a new timestamp appended to the test table.

Summary

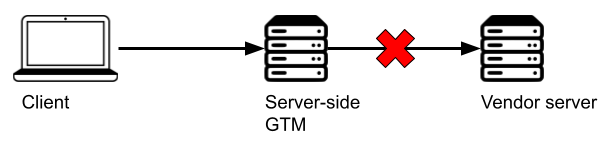

The GA4 BigQuery export happens at random times and can have long delays, making using the processed data inconvenient. One way to get the processed data in use as early as possible is to create a log-based trigger for Dataform.

Unfortunately, the daily export delay will remain even with the log-based trigger. So, the next action is to start utilizing the real-time export data from the intraday tables. Merging the daily and real-time tables will make the queries more complex. But, on the other hand, the triggering will be more straightforward, as a basic CRON job will do the work just fine.

If you want to explore the merging of daily and real-time tables, also check out my post on Dataform incremental refresh strategies.

Hi Taneli, thank you very much for your always brilliant contribution.

so the only option available to process permanent data is to query exports with data = current_date -3?

Hi!

Yes, the 72 hours is the maximum delay for event data to get processed. So after that, the data would be quaranteed to not change anymore.

However, I haven’t seen the data get changed in a “normal” setup after the initial daily processing. I think the changes will only happen if you send in data with a delay.

So the daily processed data should be good as soon as it comes in, in most cases.

Can I do this with streaming data (intraday) from GA4?

I think with the intraday table, there’s no need for a table creation trigger like this for the queries. New data is being added continuously to the intraday table without the table being recreated every time.

My other blog post has some tips for how to work with the intraday data: https://tanelytics.com/incremental-refresh-techniques-for-dataform-ga4